Deploying machine learning models as web applications and APIs is an important step in making them accessible and user-friendly. This guide walks through the deployment of a previously trained butterfly recognition model: I will demonstrate how to deploy the model as an API with Azure Machine Learning and how to host it behind a Flask web application on Microsoft Azure.

Link to GitHub

Saving the Model as ONNX

The model is first saved in the ONNX format. ONNX is a standardized format for representing machine learning models, which offers several advantages:

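- Speed: ONNX Runtime applies graph optimizations (such as constant folding and operator fusion) that can make inference noticeably faster than running the model in its original framework, although the gain depends on the model and the hardware.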

- Interoperability: ONNX supports models from various frameworks, making it easier to integrate with different platforms.

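- Reduced Model Size: An exported ONNX file contains only what inference needs (the computation graph and the weights, without optimizer state or training configuration), so it is often smaller than the original saved model, which reduces storage requirements and loading times.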

To convert the existing model to ONNX, I used the following code:

```python
import tensorflow as tf
import tf2onnx

# Load your TensorFlow model
model = tf.keras.models.load_model('butterfly_model.h5')

# Convert the model to ONNX
model_proto, _ = tf2onnx.convert.from_keras(model, output_path="butterfly_model.onnx")
```

Deploying the Model on Azure Machine Learning

With the model converted to ONNX, the next step was deploying it on Azure. Here’s a step-by-step guide to setting up the deployment:

- Set Up a Workspace in Azure ML: In the Azure portal, I created a new Azure Machine Learning workspace.

- Register the Model in the Workspace: The ONNX model was uploaded to the workspace and registered with the code below. Registration stores the model in the workspace's model registry, where it is versioned and can be retrieved during deployment.

```python
from azureml.core import Workspace, Model

# Connect to the existing Azure ML workspace
ws = Workspace(
    subscription_id='[AZURE ACCOUNT SUBSCRIPTION ID]',
    resource_group='[RESOURCE GROUP]',
    workspace_name='[WORKSPACE]'
)

# Upload the local ONNX file and register it in the workspace.
# The file name must match the one score.py loads ('butterfly_model.onnx').
model = Model.register(workspace=ws,
                       model_path="butterfly_model.onnx",  # Local path to the model file
                       model_name="butterfly-recognition-model")  # Name to register the model with
```

- Create an Endpoint for the Model: To deploy the model as an API, an endpoint needs to be created. This involves writing a score.py entry script with two functions: init(), which loads the model once when the service starts, and run(), which preprocesses each request's input, runs inference, and formats the prediction.

```python
import base64
import io
import json
import os

import numpy as np
import onnxruntime as ort
from PIL import Image


def init():
    # Called once when the service starts: load the registered ONNX model
    global model
    model_path = os.path.join(os.getenv('AZUREML_MODEL_DIR'), 'butterfly_model.onnx')
    model = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])


def run(raw_data):
    # Called for each request: decode the base64 image, preprocess it, predict
    try:
        data = json.loads(raw_data)
        image_data = base64.b64decode(data['image'])
        # convert('RGB') guarantees 3 channels (e.g. for RGBA PNGs or grayscale images)
        image = Image.open(io.BytesIO(image_data)).convert('RGB')
        image = image.resize((224, 224))
        image = np.array(image).astype('float32')
        image = np.expand_dims(image, axis=0)  # add batch dimension: (1, 224, 224, 3)

        # Run inference and take the index of the highest-scoring class
        outputs = model.run(None, {model.get_inputs()[0].name: image})
        prediction = np.argmax(outputs[0])
        return json.dumps({"prediction": int(prediction)})
    except Exception as e:
        return json.dumps({"error": str(e)})
```

- Define the Environment: Specify the deployment environment, including the Python version and required packages, so that it matches the development environment. The file below is saved as environment.yml.

```yaml
name: azureml_env
dependencies:
  - python=3.10
  - pip
  - pip:
      - azureml-defaults  # required by Azure ML to serve score.py as a web service
      - onnxruntime
      - numpy
      - pillow
      - azure-storage-blob
```

- Deploy the Model: Deploy the model as a web service on Azure Container Instances (ACI). The service exposes a scoring URI that accepts JSON input, with the image data encoded in base64 format.

```python
from azureml.core.environment import Environment
from azureml.core.model import InferenceConfig, Model
from azureml.core.webservice import AciWebservice

# Build the environment from the conda file defined above
env = Environment.from_conda_specification(name='azureml_env', file_path='environment.yml')

# Pair the entry script with its environment
inference_config = InferenceConfig(entry_script="score.py", environment=env)

# A small ACI container: 1 CPU core and 1 GB of memory
deployment_config = AciWebservice.deploy_configuration(cpu_cores=1, memory_gb=1)

# Deploy the registered model (ws and model come from the registration step)
service = Model.deploy(workspace=ws,
                       name='butterfly-model-service',
                       models=[model],
                       inference_config=inference_config,
                       deployment_config=deployment_config)
service.wait_for_deployment(show_output=True)
print(service.scoring_uri)
```
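Once deployed, the endpoint can be called from any HTTP client. The following is a minimal sketch using only the Python standard library: it base64-encodes an image into the {"image": ...} body that run() in score.py reads, posts it to the scoring URI, and maps the returned class index to a label. The helper names and the label list are hypothetical, and the Flask app in this guide may call the endpoint differently.

```python
import base64
import json
import urllib.request
from typing import Sequence

# Hypothetical placeholder labels; use the class names in the order
# the model was trained on.
LABELS = ["class_0", "class_1", "class_2"]


def build_payload(image_bytes: bytes) -> bytes:
    """Encode raw image bytes as the JSON body that run() expects."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return json.dumps({"image": encoded}).encode("utf-8")


def parse_response(body: bytes, labels: Sequence[str]) -> str:
    """Turn the service response into a class label.

    run() returns a JSON string, which the service may JSON-encode again,
    so decode a second time if the first pass yields a str.
    """
    result = json.loads(body)
    if isinstance(result, str):
        result = json.loads(result)
    if "error" in result:
        raise RuntimeError(result["error"])
    return labels[result["prediction"]]


def classify(scoring_uri: str, image_bytes: bytes, labels: Sequence[str] = LABELS) -> str:
    """POST an image to the deployed endpoint and return the predicted label."""
    request = urllib.request.Request(
        scoring_uri,
        data=build_payload(image_bytes),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_response(response.read(), labels)
```

Calling classify(service.scoring_uri, open("butterfly.jpg", "rb").read()) would return one of the labels. If key authentication is enabled on the service, an Authorization: Bearer <key> header would also be needed.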

Application Overview

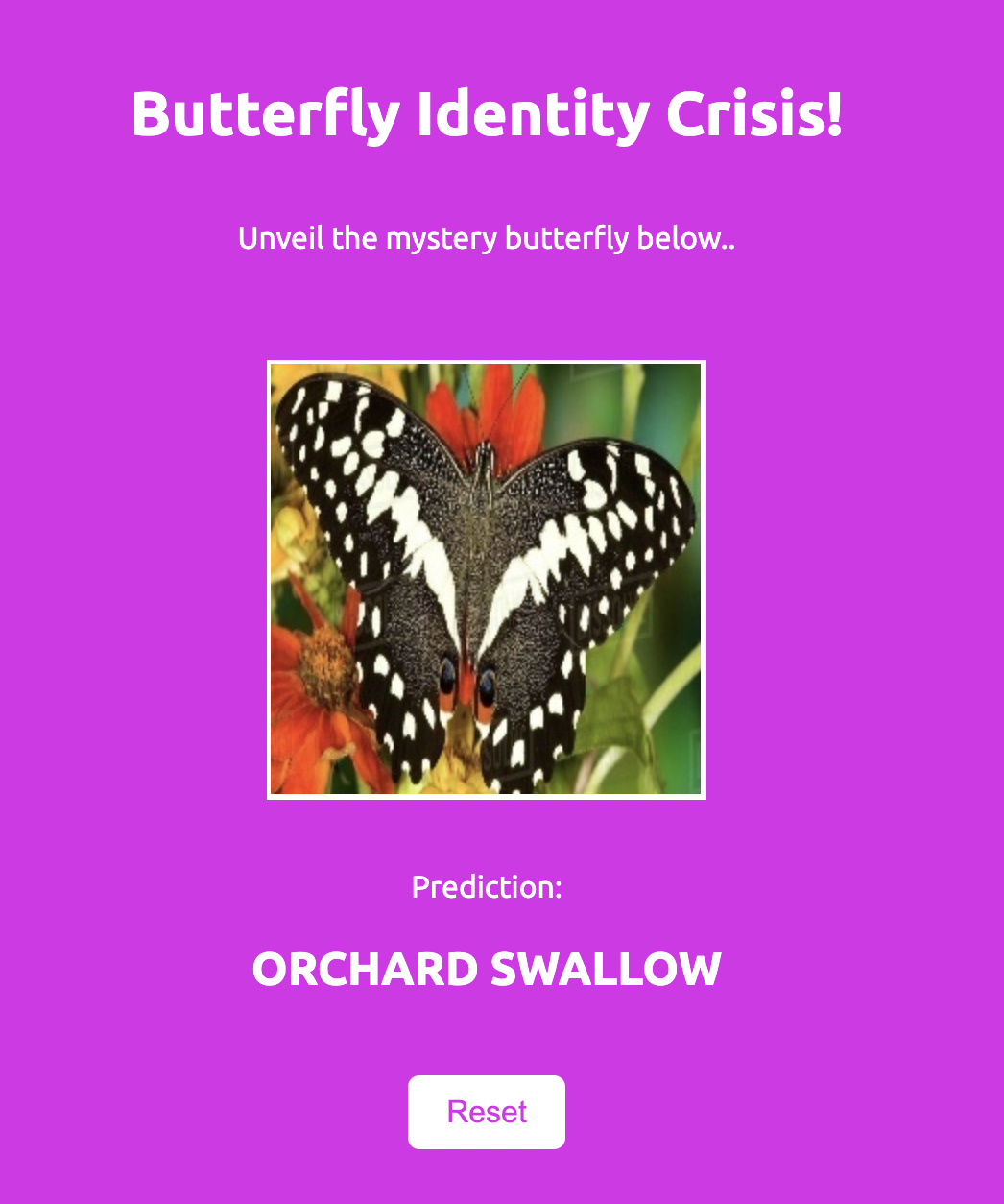

A Flask application was created to let users upload an image and have it classified.

The Flask application has two routes, one for GET and one for POST requests:

- GET (‘/’) renders the upload form.

- POST (‘/predict’) handles:

  - File Validation: Checks that a file is present in the request, is under 5 MB, and has an allowed extension (png, jpg, jpeg).

  - Image Processing and Prediction: Processes the image with the process_image function and obtains a prediction from the model's API endpoint.

  - JSON Response: Returns a JSON response containing the predicted label and the image URL, which the HTML template then displays.
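The file-validation step can be expressed as a small, framework-independent helper. This is a sketch under stated assumptions: the function names are hypothetical, and it only encodes the rules listed above (a file must be present, be under 5 MB, and end in png, jpg, or jpeg).

```python
import os
from typing import Optional

# Limits taken from the validation rules above
MAX_FILE_BYTES = 5 * 1024 * 1024
ALLOWED_EXTENSIONS = {"png", "jpg", "jpeg"}


def allowed_file(filename: str) -> bool:
    """Return True if the filename ends in one of the allowed extensions."""
    _, ext = os.path.splitext(filename)
    return ext.lower().lstrip(".") in ALLOWED_EXTENSIONS


def validate_upload(filename: str, data: bytes) -> Optional[str]:
    """Return an error message for an invalid upload, or None if it is valid."""
    if not filename:
        return "No file was provided."
    if not allowed_file(filename):
        return "Only png, jpg and jpeg files are allowed."
    if len(data) >= MAX_FILE_BYTES:
        return "The file must be smaller than 5 MB."
    return None
```

Inside the '/predict' view, filename and data would come from the uploaded file in request.files (the form field name depends on the HTML template). Setting app.config["MAX_CONTENT_LENGTH"] additionally makes Flask reject oversized requests with a 413 error before the view runs.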

The index page (HTML and CSS) contains a file upload form. When the form is submitted, a JavaScript function handles the submission, calls the ‘/predict’ endpoint, and displays the prediction result.

Additionally, a requirements.txt file specifies the Python packages the application needs, and the project is laid out so that Azure App Service can detect and start it (by default, App Service looks for a Flask object named app in app.py or application.py). During deployment, App Service installs the packages listed in requirements.txt, so the application runs with the same dependencies on Azure.

Deploying to Azure

All application files were pushed to a GitHub repository, and the application was deployed with Azure App Service, following this tutorial. In addition, the storage connection string must be added to the web app as an application setting (App Service exposes it to the app as an environment variable) so that the application can connect to Azure Blob Storage.
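The connection-string setting and the image URL returned by ‘/predict’ suggest that uploaded images are stored in Blob Storage. A minimal sketch of that step, assuming the azure-storage-blob v12 SDK, a hypothetical app setting named AZURE_STORAGE_CONNECTION_STRING, and a hypothetical container named uploads (none of these names come from the original project):

```python
import os
import uuid

# Hypothetical app-setting name; App Service exposes app settings
# to the application as environment variables.
CONNECTION_STRING_SETTING = "AZURE_STORAGE_CONNECTION_STRING"


def get_connection_string() -> str:
    """Read the storage connection string, failing early with a clear message."""
    value = os.environ.get(CONNECTION_STRING_SETTING)
    if not value:
        raise RuntimeError(f"App setting {CONNECTION_STRING_SETTING} is not configured")
    return value


def make_blob_name(filename: str) -> str:
    """Name the blob with a random UUID (keeping the extension) so uploads never collide."""
    _, ext = os.path.splitext(filename)
    return f"{uuid.uuid4().hex}{ext.lower()}"


def upload_image(data: bytes, filename: str, container: str = "uploads") -> str:
    """Upload image bytes to Blob Storage and return the blob URL."""
    # Imported lazily so the helpers above work without the SDK installed
    from azure.storage.blob import BlobServiceClient, ContentSettings

    service = BlobServiceClient.from_connection_string(get_connection_string())
    blob = service.get_blob_client(container=container, blob=make_blob_name(filename))
    content_type = "image/png" if filename.lower().endswith(".png") else "image/jpeg"
    blob.upload_blob(data, overwrite=True,
                     content_settings=ContentSettings(content_type=content_type))
    return blob.url
```

Note that the returned URL only renders in the browser if the container allows public read access or a SAS token is appended to it.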

Deploying the application to Azure App Service directly from GitHub offers several advantages. It enables Continuous Integration and Continuous Deployment (CI/CD): any change pushed to the GitHub repository is automatically deployed to Azure. App Service also provides built-in scaling, security features such as HTTPS enforcement and built-in authentication, and a user-friendly portal for monitoring and managing the application. Together, these features streamline the deployment process and make the application easy to maintain and scale as needed.